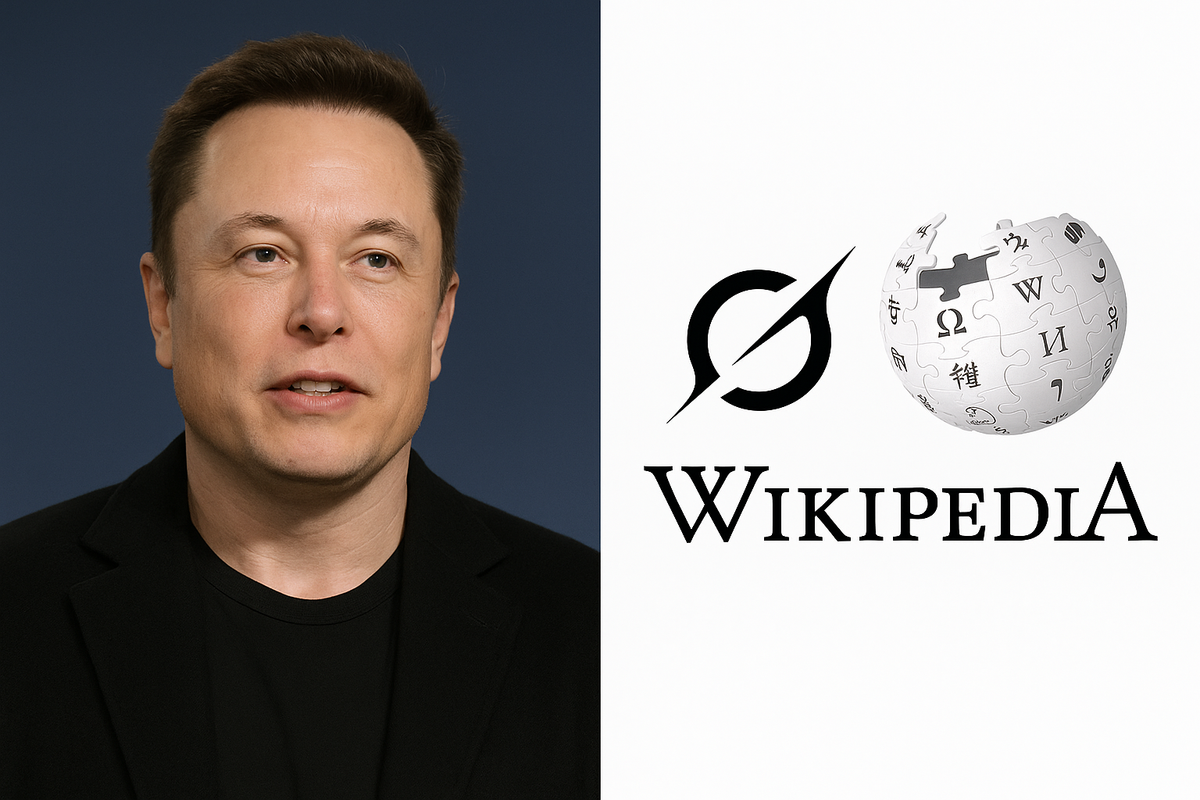

What Elon Musk’s Grok / “Grokipedia” talk about Wikipedia means — and what to expect

Elon Musk’s AI project Grok (from his company xAI) and his recent public talk about launching a new AI-driven encyclopedia, called Grokipedia in press reports, have sparked a lot of questions: Is this a useful alternative to Wikipedia? Is it a threat to public knowledge? How would it work? Below I summarize the facts reported so far, explain the likely models at play, and list the realistic upsides and downsides to expect in the months ahead.

Short version (TL;DR)

- Grok is xAI’s conversational AI chatbot, which has been developed and iterated rapidly (Grok 2 → Grok 3 and related releases). (Source: Wikipedia.)

- Elon Musk and several news outlets have said xAI plans a product called Grokipedia: an AI-powered knowledge platform pitched as a rival or alternative to Wikipedia and positioned as a public-facing encyclopedia that leans on Grok’s capabilities. Early reporting describes an “early beta” and community-like features. (Sources: The Times of India and one other.)

- Expect a mix of experimental features (AI-curated or AI-drafted articles, search + explain flows) plus immediate controversies over reliability, editorial control, and bias. Experts say it will face a steep uphill battle to match Wikipedia’s scale and governance. (Sources: Northeastern Global News and one other.)

What Grok already is (brief factual background)

Grok is the conversational AI product from xAI, the company Musk helped found. Over 2024–2025 xAI released successive Grok models and expanded their capabilities (image understanding, PDF uploads, web search integrations, and major new model releases like Grok 3). xAI has also rolled out apps and APIs for developers. Those engineering steps are real and documented. (Source: Wikipedia.)

What Musk (and reporting) say about “Grokipedia”

Multiple reports and Musk’s posts indicate xAI is building an AI-powered encyclopedia branded around Grok, sometimes called Grokipedia in headlines, intended to offer an alternative to Wikipedia. Coverage says the platform will combine AI summarization and search with some community contributions (the descriptions mention Community Notes–style formats), and that an early beta version was expected soon after the announcements. These are claims reported in coverage of Musk and xAI; they do not describe an established, stable product yet. (Sources: The Times of India and one other.)

How it might work (likely mechanics)

Based on how other AI knowledge projects operate and on what Grok already does, a plausible design includes:

- AI-generated or AI-summarized articles that Grok writes from web sources and internal datasets.

- A human-in-the-loop layer where users, volunteers or moderators can review, correct, annotate or dispute AI outputs (the “community notes” language in reporting suggests this).

- Search + explain: users ask Grok questions and receive a written explanation plus citations (or links) to source material.

- APIs and integrations so third parties or developers can embed Grokipedia outputs.

These are reasonable inferences based on reported features of Grok and descriptions of the planned encyclopedia. (Sources: Wikipedia and one other.)
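To make the human-in-the-loop idea concrete, here is a minimal Python sketch of how AI-drafted claims, their sources, and reviewer disputes could fit together. Everything in it is hypothetical: the names `Claim`, `Article`, `ReviewStatus`, `dispute`, and `explain` are invented for illustration, the URLs are placeholders, and nothing here reflects how xAI has actually built or plans to build Grokipedia.

```python
from dataclasses import dataclass, field
from enum import Enum


class ReviewStatus(Enum):
    """Hypothetical review states for an AI-drafted claim."""
    UNREVIEWED = "unreviewed"
    APPROVED = "approved"
    DISPUTED = "disputed"


@dataclass
class Claim:
    """One AI-drafted statement plus the sources it cites."""
    text: str
    source_urls: list[str] = field(default_factory=list)
    status: ReviewStatus = ReviewStatus.UNREVIEWED
    notes: list[str] = field(default_factory=list)  # reviewer annotations


@dataclass
class Article:
    title: str
    claims: list[Claim] = field(default_factory=list)

    def publishable_claims(self) -> list[Claim]:
        """Return claims that cite at least one source and are not disputed."""
        return [
            c for c in self.claims
            if c.source_urls and c.status is not ReviewStatus.DISPUTED
        ]


def dispute(claim: Claim, note: str) -> None:
    """Record a reviewer's objection and mark the claim as disputed."""
    claim.status = ReviewStatus.DISPUTED
    claim.notes.append(note)


def explain(article: Article) -> str:
    """Render a "search + explain" style answer: each claim with its links."""
    lines = [article.title]
    for i, c in enumerate(article.publishable_claims(), start=1):
        lines.append(f"{i}. {c.text} [{', '.join(c.source_urls)}]")
    return "\n".join(lines)


# Usage with placeholder data (assumed, not real content).
article = Article(
    title="Example topic",
    claims=[
        Claim("A sourced statement.", ["https://example.org/a"]),
        Claim("An unsourced statement."),
        Claim("A contested statement.", ["https://example.org/b"]),
    ],
)
dispute(article.claims[2], "Source does not support this wording.")
print(explain(article))
```

The design choice worth noticing is that an unsourced claim and a disputed claim are both withheld from the rendered answer. Whether a real platform would hide, flag, or still show such claims is exactly the kind of policy question raised later in this piece.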

Potential upsides (what could be good)

- Faster, conversational access to summarized knowledge. For many users, an AI that explains topics in plain language with source snippets is convenient. (Source: Wikipedia.)

- Novel formats: Grok could blend multimedia answers, images, or reasoning modes that a static encyclopedia can’t. (Source: Wikipedia.)

- Competition may push improvements: pressure from new entrants sometimes motivates legacy projects (including Wikipedia) to innovate around UX, transparency, and tooling.

Real and immediate downsides to expect

- Accuracy and sourcing problems. LLMs hallucinate; unless strict source-tracking and verification are enforced, AI-written encyclopedia entries risk factual errors and misleading summaries. Experts warn AI-first encyclopedias face a difficult reliability test compared with Wikipedia’s long-established citation and editorial systems. (Sources: Northeastern Global News and one other.)

- Editorial transparency and governance. Wikipedia’s strength is an open volunteer community, policies and an administrative structure. A corporate-run encyclopedia raises questions: Who edits? Who decides disputes? How are conflicts of interest handled? Experts emphasize governance will be critical. (Source: Northeastern Global News.)

- Potential bias and trust concerns. Musk has publicly criticized Wikipedia for perceived bias; his critics worry a rival project could reflect different editorial slants or reduced checks on political content. Expect debates over its neutrality to start quickly. (Source: New York Post.)

- Legal / copyright questions. Large language models are trained on vast text corpora; any platform that republishes or synthesizes copyrighted material can raise IP issues and source-attribution questions. (This is a common, active debate across AI projects.) (Source: Wikipedia.)

What to watch for next (concrete signals)

- Beta release details: Is Grokipedia publicly available? Is there a clear correction and provenance policy? Early product docs and release notes will reveal design choices. (Source: The Times of India.)

- Citations & provenance: Does every AI-generated claim include verifiable links to sources? Are sources primary and trustworthy? (Source: Northeastern Global News.)

- Community participation model: Is there a volunteer editor base, or is editorial control centralized at xAI? The answer will shape the platform’s credibility. (Source: Northeastern Global News.)

- Independent audits / transparency reports: Third-party audits of training data, moderation policies and error rates would improve trust. Watch for reputable audits or academic analyses.

- Response from Wikimedia/Wikipedia: Will the Wikimedia community make technical or policy changes in response? Expect commentary and, possibly, collaborative or defensive moves.
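Two of the signals above, citation coverage and audited error rates, reduce to simple ratios that an outside reviewer could compute from a sample of entries. The Python sketch below shows the arithmetic only; the function names and the sample data are made up for illustration, and a real audit would also need a sampling plan and a rule for what counts as an error.

```python
def citation_coverage(claim_sources: list[list[str]]) -> float:
    """Fraction of claims that cite at least one source.

    Each inner list holds the source links attached to one claim.
    """
    if not claim_sources:
        return 0.0
    cited = sum(1 for sources in claim_sources if sources)
    return cited / len(claim_sources)


def sampled_error_rate(audit_results: list[bool]) -> float:
    """Fraction of audited claims a reviewer judged incorrect.

    Each entry is True if the claim was verified as correct, False otherwise.
    """
    if not audit_results:
        return 0.0
    errors = sum(1 for verified in audit_results if not verified)
    return errors / len(audit_results)


# Hypothetical sample: four claims, two of them with sources attached.
sample_sources = [["https://example.org/a"], [], ["https://example.org/b"], []]
# Hypothetical audit: three claims verified, one found to be wrong.
sample_audit = [True, True, False, True]

print(citation_coverage(sample_sources))  # 0.5
print(sampled_error_rate(sample_audit))   # 0.25
```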

Bottom line

The idea of an AI-first encyclopedia powered by Grok is plausible and actively discussed in media reports — but it’s an early-stage product concept rather than a finished replacement for Wikipedia. Grokipedia (as reported) promises convenience and new UX, but the core problems of accuracy, source provenance, governance and bias remain hard technical and social challenges that any credible encyclopedia must solve. If xAI addresses those convincingly (transparency, clear edit processes, strong sourcing), Grokipedia could become a useful complement to existing reference projects. If not, it risks becoming another fast-growing but error-prone AI content source that fuels controversy rather than trusted knowledge.